Houston couple duped out of $5K by scammers who used artificial intelligence to clone their son's voice and pose as him, claiming he was in trouble and urgently needed money.

A Houston, Texas, couple was scammed out of $5K by thieves who used artificial intelligence to make their voices sound like the couple's son.

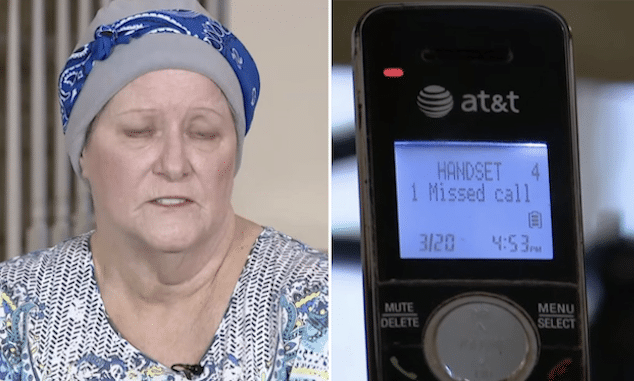

Fred and Kathy were duped after scammers called the family pretending to be their son. The caller claimed he had been in a car crash that left a pregnant woman ‘badly injured,’ and that he was now in jail with a broken nose on DWI charges and urgently needed money. Believing their son was in danger, the parents did not hesitate to send the funds.

‘This is a serious situation. He did hit a lady that was six months pregnant,’ Kathy told KHOU, recounting what she was told by a caller she believed at the time was her son. ‘It was going to be a high-profile case and she did lose the baby.’

‘I could have sworn I was talking to my son. We had a conversation,’ Kathy told the media outlet.

The thieves told the parents they needed $15,000 to bail their son out of jail, and the couple took the situation so seriously that Kathy postponed her cancer chemotherapy.

Artificial Intelligence used to create fake realities

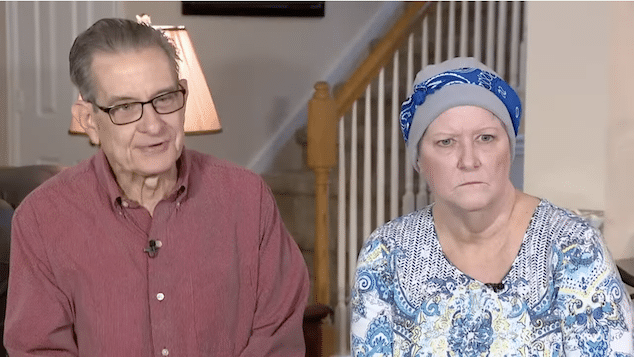

Fred and Kathy (who declined to share their last names) said they are telling their story in the hope that it might keep someone else from falling into a similar and increasingly common situation.

Authorities now believe that artificial intelligence was most likely used to emulate their son's voice.

During the ruse, the scammer told the parents they needed $15K to make bail, an amount that was eventually lowered to $5K. The scammers even offered to come and pick up the money to expedite their son's release.

It wasn’t until after the money had already been handed over that Fred and Kathy realized they had been tricked; the couple’s son had been at work the whole time.

Shockingly, one forensics expert said that voice cloning is not only becoming more common but is also not especially difficult for scammers to pull off.

‘They actually don’t need as much as you think,’ said Eric Devlin with Lone Star Forensics.

‘They can get it from different sources – from Facebook, from videos that you have public, Instagram, anything you publish,’ Devlin continued.

To all my friends in Congress — especially @SenSchumer — thank you!

Once again, you’ve kept up your side of the bargain by holding up new laws that would hold Big Tech companies like mine accountable. #FakeZuck pic.twitter.com/8zXm5gNNiP

— Fake Zuck (@DeepFakeZuck) November 29, 2022

Deepfake videos arriving on social media

Fred and Kathy are now using their story to help protect others.

‘I mean we scrounged together the $5,000, but the next person could give them the last cent that they own,’ Kathy said.

Instances of artificial intelligence stirring up trouble on the internet and in real life have become commonplace in recent months, and even some of the best-known public figures have not been immune.

In February, a deepfake video of Joe Rogan promoting a libido booster for men went viral on TikTok, with many online calling it ‘eerily real.’

At the time, the video sparked widespread fear that such fakes could fuel serious scams and spread misinformation.

In February, many Twitter users noted that it is illegal to use artificial intelligence to recreate someone's likeness to promote a product.

In 2022, a deepfake video ad was released that appeared to show Meta CEO Mark Zuckerberg, in which the tech honcho is heard thanking Democrats for their ‘service and inaction’ on antitrust legislation.